High-Level Design and High-Level Verification

Expectations for C++/SystemC Designs Must Be Set Properly

By Dominik Strasser, Vice President of Engineering

Not so long ago, some EDA vendors were painting a very attractive picture of chip design in the then-near future. The idea was that an architectural team would write a single description of the complete system in some high-level language, usually C/C++/SystemC, and that a new class of EDA tool would automatically partition the design into hardware and software, choosing the functionality of each to meet the project’s power/performance/area (PPA) goals. Such a tool would generate SystemVerilog RTL for the hardware, which would be turned into a chip (or chips) by logic synthesis. The tool would generate C code for the software portions, in the form of programs that could be compiled directly and run on the hardware. The system-level description would remain the golden source, and neither hardware engineer nor programmer would have to make any changes to the generated design and software. Further, virtually all functional verification would be done on the system-level model.

This was a nice vision while it lasted, but the industry has been able to take only relatively small steps toward its realization. Automatic partitioning has proven to be a very difficult problem, due in part to the different ways that software and RTL are written. As one example, hardware generally stores the least amount of data needed to get started processing. A huge amount of streaming data can be processed even though only a portion of it may be within the chip at any given point in time. The hardware micro-architecture must reflect this. Software, on the other hand, tends to keep large buffers in memory. In software, the data is generally persistent; in hardware, it’s temporal. Software also tends to regard reading and writing memory as essentially free, whereas memory accesses in hardware may have high cost.

Most software and system-level models are written without a specific hardware architecture in mind. An algorithm might read a series of locations from memory (for example, a packet header), process them, and serially write them back or into another set of memory locations. This would be inefficient in hardware, so parallelism and pipelining are used. Expecting an EDA tool to take a system-level description and define the entire hardware micro-architecture automatically is beyond the current state of the art. To be sure, high-level synthesis (HLS) tools are in wide use today, but their input is only a few steps more abstract than RTL code, not a C description of the complete hardware-plus-software system. In practice, teams who use C/C++/SystemC models for architectural analysis early in the project spend a lot of effort refining those models so that HLS can handle them and produce results meeting PPA goals.

The lack of an automated flow from system to hardware means that significant portions of the verification work are still done on the RTL design. Even within the narrower range of HLS, the industry lacks effective equivalence checking from C++/SystemC to RTL. Moving verification earlier, to more abstract models, requires assurance that the downstream design is consistent and does not need to be re-verified. Logic-versus-schematic (LVS) tools gave confidence in the chip layout while RTL-to-gates equivalence checkers confirmed that logic synthesis did not alter design functionality. Unfortunately, HLS users do not have a similar solution. The result is that many verification teams focus on the RTL, especially for static and formal techniques. There may be some amount of simulation test bench reuse between HLS models and RTL, but there is typically no connection at all to the architectural level. There is even less automation for software, which is typically written expressly as programs that will be executed on the architectural model and the actual chip, and possibly on the HLS and RTL models as well.

Despite all the shortfalls in the grand vision, perhaps the industry has failed to achieve as much as it can with high-level verification. But there are opportunities for improvement. Many of the static and formal checks routinely performed on RTL designs are also entirely applicable for high-level design models. Automatic checks include:

- Out-of-bounds array accesses (security risk)

- Dead code (wasted area)

- Extra unused bits (wasted area)

- Too few bits (lost data)

- Race conditions (system instability)

- Uninitialized registers and X-propagation (unexpected behavior)

Adding C++/SystemC support to static and formal tools makes it possible to detect such problems much earlier in the design process, before running HLS. Other RTL formal applications (apps) such as connectivity checking, floating-point unit (FPU) verification, RISC-V processor verification, and protocol checking may be applicable to high-level design models as well. Technology exists to use SystemVerilog Assertions (SVA) with C++/SystemC, so formal support for limited assertion-based verification (ABV) is also available for HLS users today.

While much verification can be accomplished at the high level, the lack of equivalence checking means that automatic checks, formal apps, and ABV must also be run on the RTL code. Since most bugs are detected before HLS, the RTL runs should be fairly clean. Verification engineers may add more assertions or run additional formal apps at this stage, so some new bugs might be discovered. Regardless, high-level verification is a major boost for the overall chip project. It provides earlier detection and correction of bugs, more efficient HLS, significantly reduced effort at the RTL stage, and a high-level design and verification flow a few steps closer to the grand vision.

For more information on the OneSpin SystemC/C++ Solution, download a flyer.

About the author

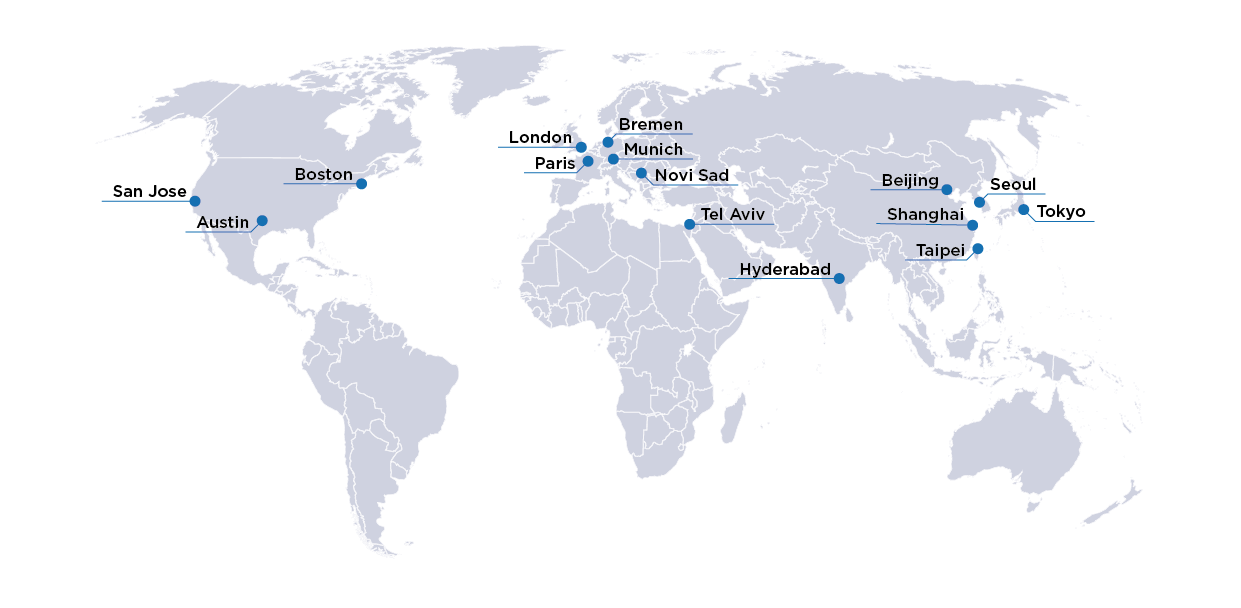

Dominik Strasser, co-founder of OneSpin Solutions, was named VP Engineering in June 2012. He has a track record of 20+ years in engineering management and engineering.

Since 2005 he headed the development of OneSpin's flagship product 360DV. Prior to OneSpin, Strasser led the formal verification development group at Infineon Technologies in Munich. Previously, at Siemens, he led industrial research projects on formal verification and industrial computing at the company's Corporate Research Lab in Germany. He began his career in the compiler department of Siemens Nixdorf doing C and C++ compilers for Unix and mainframe operating systems.